Some time ago, I’ve started developing the backend for my Pregnancy app. To run everything pretty fast 🚀, cheap 💲 and having the option to change the db schema anytime without much pain 💊, datastore was a great solution for me. Later, I wanted to start learning from data and running some queries. The basic ‘select’ queries worked fine, but trying to create more interesting queries which required few joins, was almost impossible mission. Datastore doesn’t support join and nested queries. After making some checks with loading part of the query results into memory and joining programmatically, I found out that this is a really bad approach. The final decision was leaving Datastore and migrating all the data into Google Cloud SQL.

Steps

- Prepare storage bucket

- Export datastore to the bucket

- Download the backup

- Unmarshal and load to model

- Example

Prepare storage bucket

- Open Developer Console

- Go to Storage section

- Create bucket and give it a unique name. For example:

my-migrated-datastore - Click on created bucket and press Create Folder. Let’s call it:

data

Export datastore to the bucket

-

Go to Datastore section

-

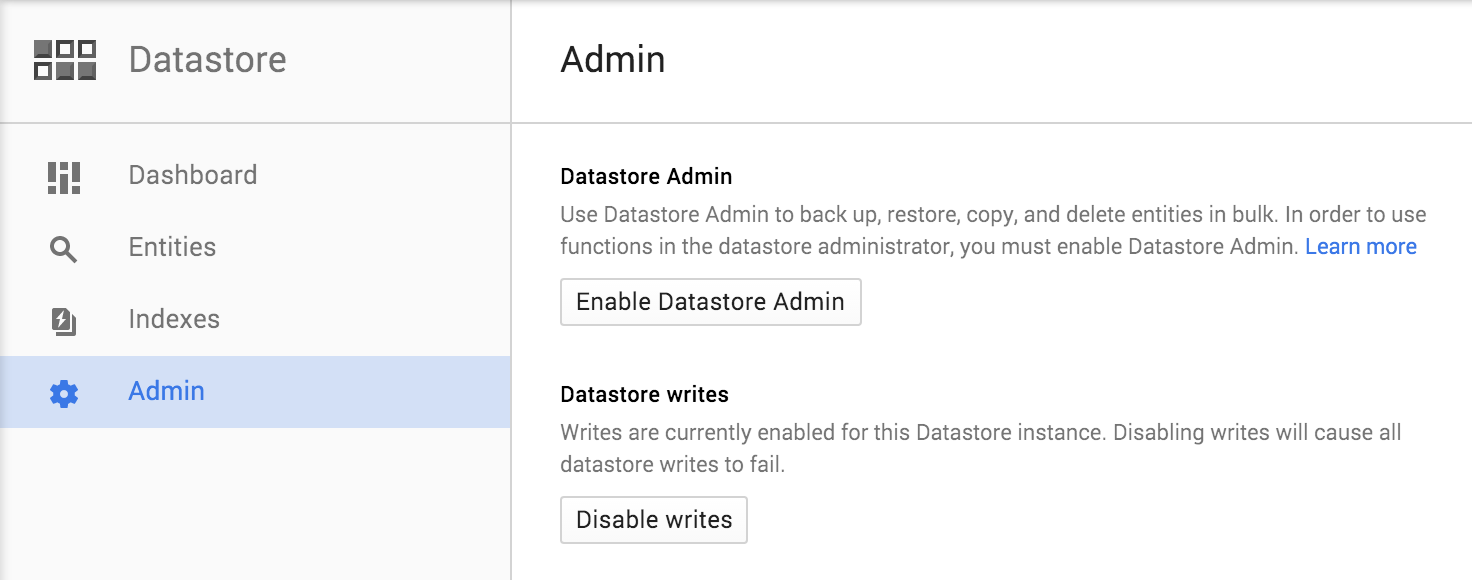

Click on

Adminand Enable Datastore Admin if it’s disabled

-

Click on Open Datastore Admin

-

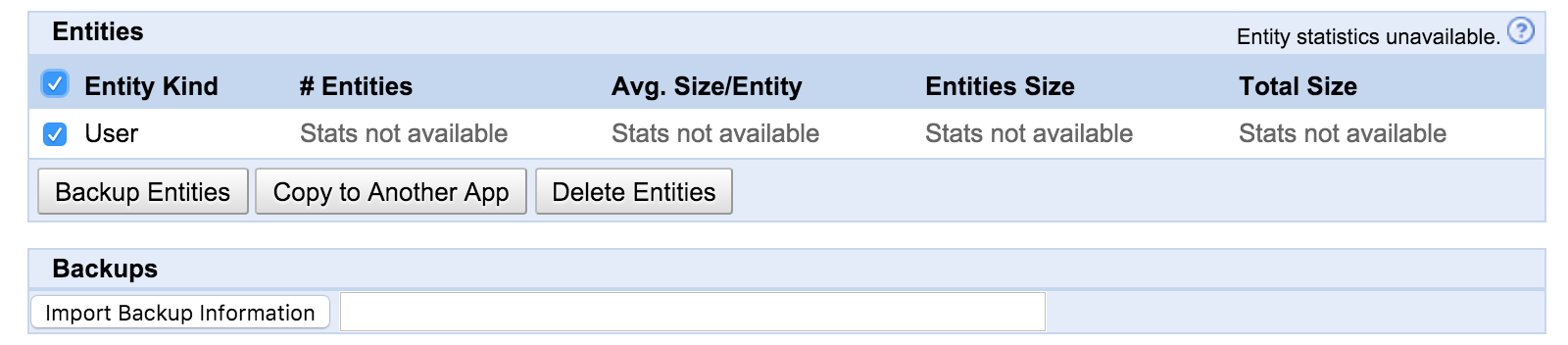

Select entities you want to export

-

Click on Backup Entities

-

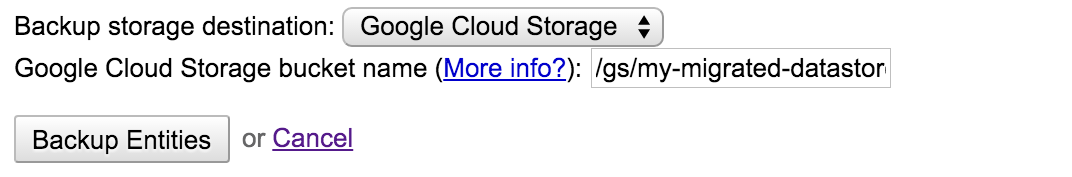

Under

Google Cloud Storage bucket namewrite:/gs/my-migrated-datastore/data. Click on Backup EntitiesThe format of this: /gs/{bucket-name-you-created}/{folder-in-bucket}

-

Click on Back to Datastore Admin and you will see the pending and completed backups. Refresh page from time to time to see the changed status.

If you open the folder data in bucket you’ve created, you will see *.backup_info files and folders per entity.

Download the backup

-

Make sure you have gsutil installed.

-

Download the files from bucket to your machine:

gsutil cp -R gs://my-migrated-datastore/data .The syntax is: gsutil cp -R gs://your_bucket_name/folder /local_target

Unmarshal and load to model

The script is combined of:

Part of original datastore package with some little changes like making the method LoadEntity as exported (public) and removing all dependencies to internal appengine packages.

The backup.go code will unmarshal and load the backup file into the model.

Run it yourself

go get -u github.com/sromku/datastore-to-sql/backup

Load the backup file into model

This script will load the backup file into ‘Profile’ model and print it

import (

"fmt"

"github.com/sromku/datastore-to-sql/backup"

)

func main() {

backupPath := ".../output-0"

backup.Load(backupPath, &Profile{}, nil,

func(res interface{}) {

profile, _ := res.(*Profile) // the loaded model

fmt.Println(profile)

})

}

type Profile struct {

Name string `datastore:"name, noindex"`

Email string `datastore:"email"`

Gender int `datastore:"gender, noindex"`

}

Example

-

Open example folder and run

go run model.go main.go. The script will load backed up file which is located under ‘exported-data’ folder and print SQL script for importing it later. -

This is what will you see:

INSERT INTO `users` VALUES ('Aindrea Tim','aindrea.tim@gmail.com',1); INSERT INTO `users` VALUES ('Alban Lestat','alban.lestat@gmail.com',0); INSERT INTO `users` VALUES ('Erik Erich','erik.erich@gmail.com',0); INSERT INTO `users` VALUES ('Luisita Karolina','luisita.karolina@gmail.com',1);

🍉 From this point, you can import it and do whatever you need. The main pain for me, was getting until this point. By having this logic and code, I did all needed adjustments and created final SQL script with all inserts, unique fields, tables and etc.. I will leave it for you :)